Motion Graphics

Built with TouchDesigner

01-25-2022

TouchDesigner

Generative Art

Creative Coding

Audio Visual

Mapping the sound in NYC

Summary

- Step 1: Coding Issues 1, 2D Particles

- Step 2: Coding Issues 2, 3D Particles, Colors, and Textures

- Step 3: City Radio

- Step 4: Communicator (Data Parsing & Controls)

- Step 5: Sonic City, New York

- Step 6: Final Presentation, Audio-Visual

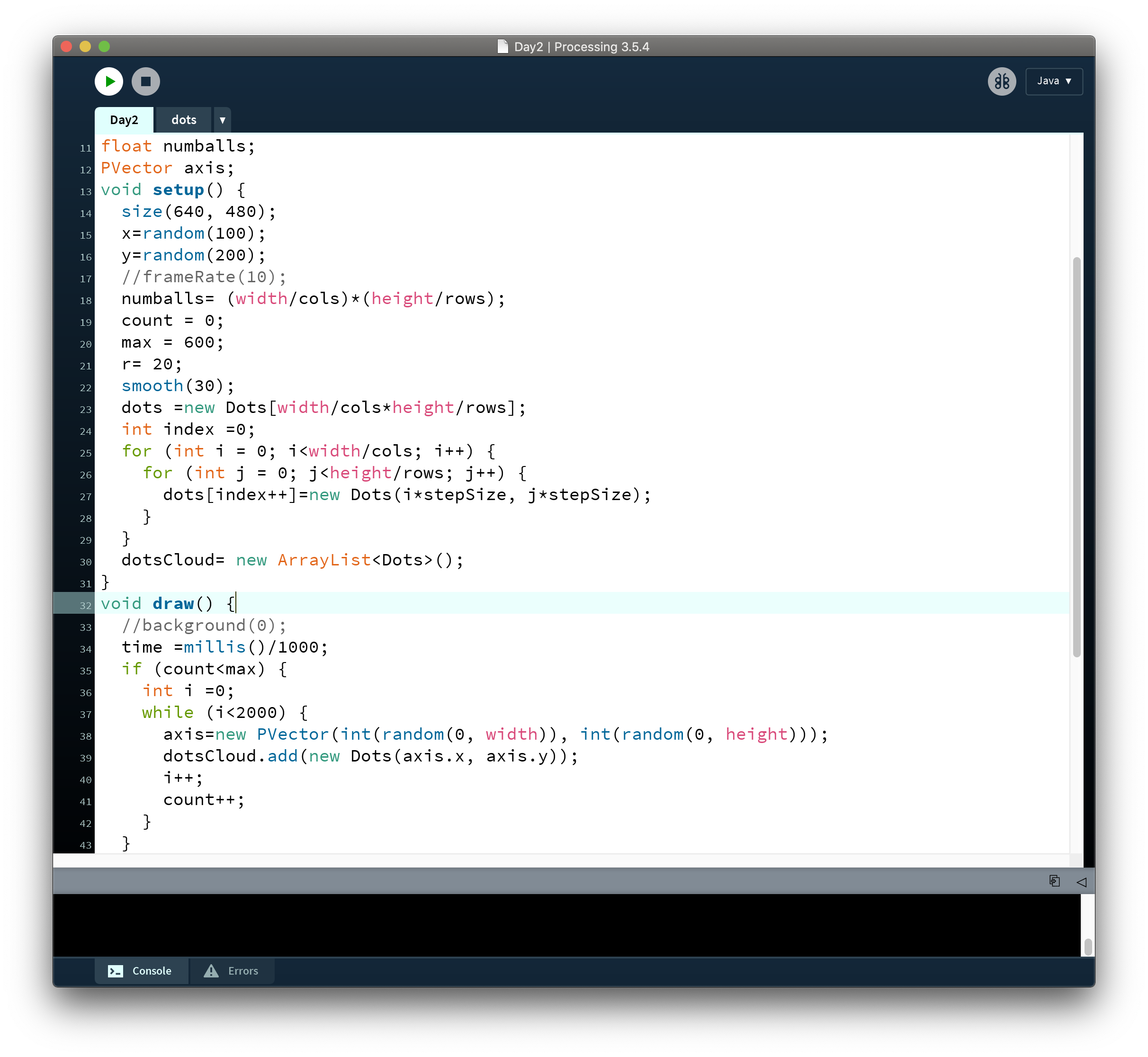

Step 1: Coding Issues 1, 2D Particles

Processing


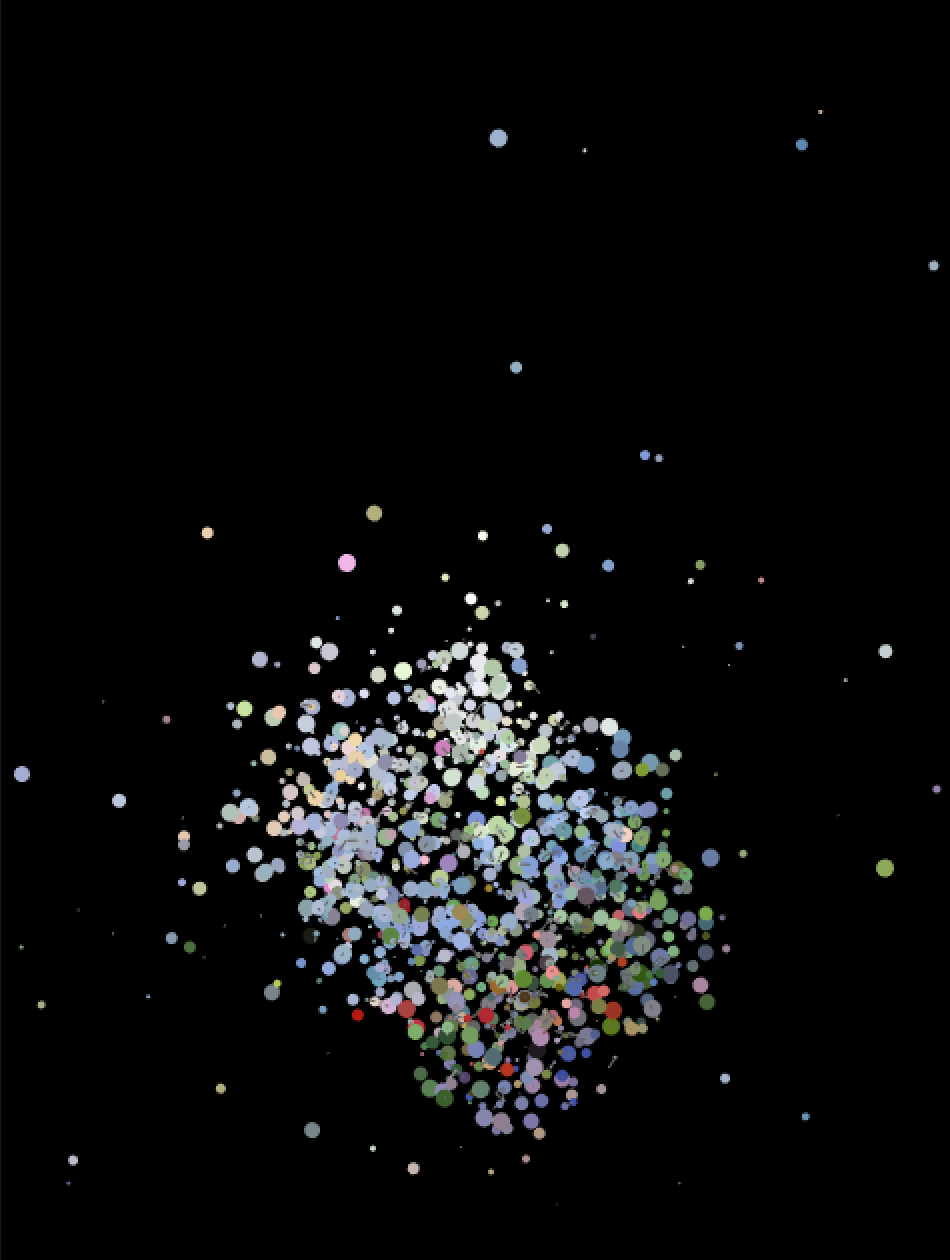

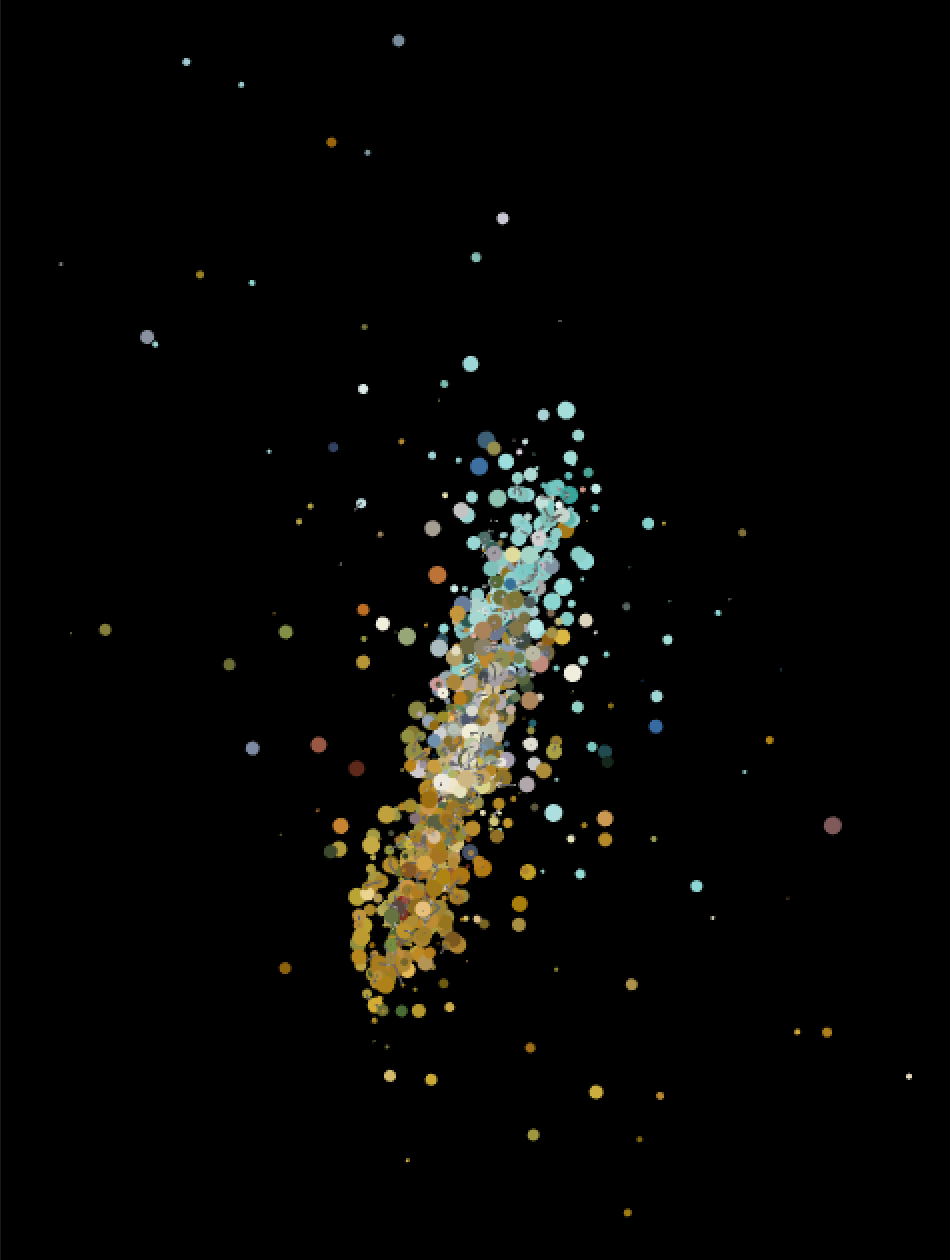

Step 2: Coding Issues 2, 3D Particles & Colors

3D cameras, 3D movements, and color gradients
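A color gradient across a 3D particle field can be driven by each particle's depth. The sketch below is in TypeScript rather than the Processing used for this step (Processing's built-in lerpColor() does the same interpolation inside a sketch); keying the gradient to the z coordinate, and the names `lerpColor` and `depthColor`, are illustrative assumptions.

```typescript
type RGB = [number, number, number];

// Linearly interpolate between two RGB colors; t is clamped to [0, 1].
function lerpColor(a: RGB, b: RGB, t: number): RGB {
  const k = Math.min(1, Math.max(0, t));
  return [
    Math.round(a[0] + (b[0] - a[0]) * k),
    Math.round(a[1] + (b[1] - a[1]) * k),
    Math.round(a[2] + (b[2] - a[2]) * k),
  ];
}

// Map a particle's depth z in [zNear, zFar] to a color between `near` and `far`.
// Depths outside the range are clamped to the end colors.
function depthColor(z: number, zNear: number, zFar: number, near: RGB, far: RGB): RGB {
  return lerpColor(near, far, (z - zNear) / (zFar - zNear));
}
```

Calling `depthColor` once per particle per frame lets the gradient follow the particles as the 3D camera moves through them.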

Adding image textures to particles (unfinished)

Taking colors from image pixels
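Taking colors from a painting amounts to looking up the pixel under each particle. A minimal TypeScript sketch, assuming the browser canvas `ImageData` layout (row-major RGBA, 4 bytes per pixel) rather than Processing's packed `pixels[]` integers; `PixelBuffer`, `sampleColor`, and `colorParticles` are hypothetical names.

```typescript
type RGB = [number, number, number];

// Pixel data in the canvas ImageData layout: row-major RGBA, 4 bytes per pixel.
interface PixelBuffer {
  width: number;
  height: number;
  data: Uint8ClampedArray;
}

interface Particle {
  x: number; // position on the canvas, in pixels
  y: number;
  color: RGB;
}

// Color of the image pixel under normalized coordinates (u, v) in [0, 1].
function sampleColor(img: PixelBuffer, u: number, v: number): RGB {
  const x = Math.min(img.width - 1, Math.max(0, Math.floor(u * img.width)));
  const y = Math.min(img.height - 1, Math.max(0, Math.floor(v * img.height)));
  const i = (y * img.width + x) * 4;
  return [img.data[i], img.data[i + 1], img.data[i + 2]];
}

// Give every particle the color of the painting pixel beneath it,
// stretching the image to fill a canvas of canvasW x canvasH pixels.
function colorParticles(particles: Particle[], img: PixelBuffer, canvasW: number, canvasH: number): void {
  for (const p of particles) {
    p.color = sampleColor(img, p.x / canvasW, p.y / canvasH);
  }
}
```

In a browser, `ctx.getImageData(0, 0, w, h)` on a canvas holding the painting returns an object with exactly this `width`/`height`/`data` shape.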

![Tests with famous paintings by Ingres, Van Gogh, and Monet.]()

![Image from Claude Monet's Water Lilies]()


Step 3: Establishing a City Radio

As we find serenity in classic art and paintings, noise, chaos, and digital sound are the opposite side of these artworks.

Instead, I want to capture the chaos, noise, and uprisings of our city life.

On day 4, I am building a radio to receive and analyze the ‘invisible’ noise around me, to be used later as interference on images of the city (see the RTL-SDR quick start guide: https://www.rtl-sdr.com/rtl-sdr-quick-start-guide).

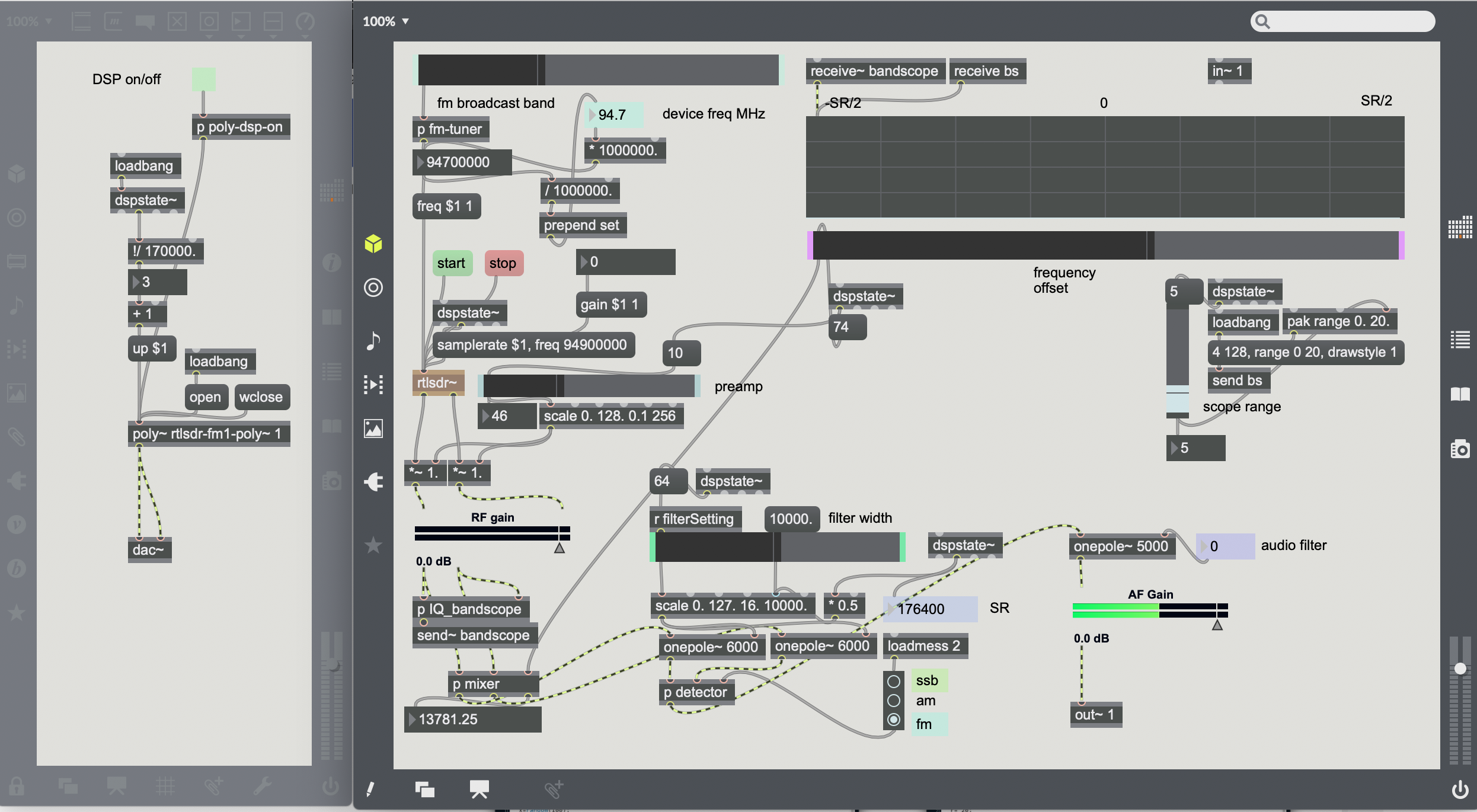

In Max/MSP, using a scope, I built a radio analysis app.
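The analysis app itself lives in Max/MSP. As a hedged illustration of the kind of level a scope displays, the TypeScript sketch below estimates average signal power from raw RTL-SDR samples. It assumes the raw output format of the `rtl_sdr` command-line tool (interleaved unsigned 8-bit I and Q bytes centered near 127.5); the function name is hypothetical, and 0 dB here means a power of 1 after scaling each component to [-1, 1].

```typescript
// Average power, in dB, of a block of raw RTL-SDR samples:
// interleaved unsigned 8-bit I/Q bytes centered around 127.5.
function iqPowerDb(raw: Uint8Array): number {
  const n = Math.floor(raw.length / 2); // number of complex samples
  if (n === 0) return -Infinity;
  let sum = 0;
  for (let k = 0; k < n; k++) {
    const i = (raw[2 * k] - 127.5) / 127.5; // in-phase component, scaled to [-1, 1]
    const q = (raw[2 * k + 1] - 127.5) / 127.5; // quadrature component, scaled to [-1, 1]
    sum += i * i + q * q; // instantaneous power |I + jQ|^2
  }
  return 10 * Math.log10(sum / n);
}
```

Computing this per block of samples gives a running "noise level" of the surrounding radio spectrum that could later drive visuals.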


![Establishing a little radio station in Max/MSP]()

![Scope in Max/MSP]()

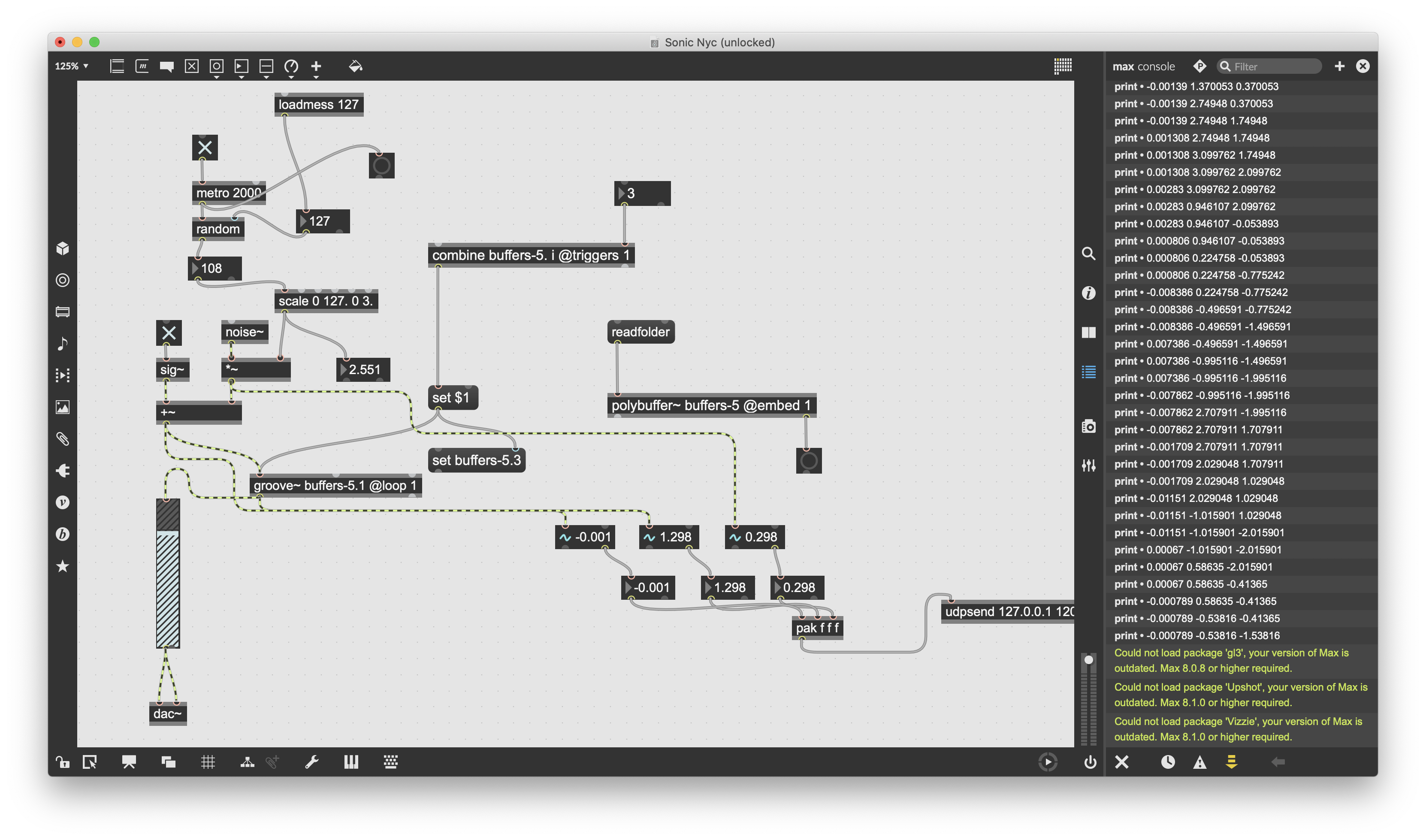

Step 4: Communicator (Data Parsing & Controls)

Data input on the Processing side, using OSC.

Part 1.

Building the message sender on the Max side

Part 2.

In Processing, adding an OSC message reader.

Part 3.

Transferring the radio data to affect the real camera vision
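Parts 1 and 2 pass OSC messages from Max to Processing. Both ends normally use ready-made tools (for example Max's udpsend object and the oscP5 library in Processing), so the TypeScript sketch below only illustrates the OSC 1.0 wire format they agree on: a null-terminated address padded to 4 bytes, a type-tag string starting with a comma, then big-endian arguments. The address `/radio/level` and all function names are hypothetical, and only float32 and int32 arguments are handled.

```typescript
// Round a byte count up to the next multiple of 4, as OSC 1.0 requires.
const pad4 = (n: number): number => (n + 3) & ~3;

// Encode an OSC string: ASCII bytes plus at least one null, padded to 4 bytes.
function oscString(s: string): Uint8Array {
  const out = new Uint8Array(pad4(s.length + 1)); // zero-filled, so padding is nulls
  for (let i = 0; i < s.length; i++) out[i] = s.charCodeAt(i) & 0x7f;
  return out;
}

// Build an OSC message whose arguments are all float32 (type tag 'f').
function encodeOscFloats(address: string, values: number[]): Uint8Array {
  const addr = oscString(address);
  const tags = oscString("," + "f".repeat(values.length));
  const out = new Uint8Array(addr.length + tags.length + 4 * values.length);
  out.set(addr, 0);
  out.set(tags, addr.length);
  const view = new DataView(out.buffer);
  values.forEach((v, k) => view.setFloat32(addr.length + tags.length + 4 * k, v, false)); // big-endian
  return out;
}

// Read a null-terminated, 4-byte-padded OSC string; returns [text, next offset].
function readOscString(buf: Uint8Array, offset: number): [string, number] {
  let end = offset;
  while (end < buf.length && buf[end] !== 0) end++;
  let s = "";
  for (let i = offset; i < end; i++) s += String.fromCharCode(buf[i]);
  return [s, offset + pad4(end - offset + 1)];
}

// Decode a message whose arguments are float32 ('f') or int32 ('i').
function decodeOsc(buf: Uint8Array): { address: string; args: number[] } {
  const view = new DataView(buf.buffer, buf.byteOffset, buf.byteLength);
  const [address, afterAddress] = readOscString(buf, 0);
  const [tags, afterTags] = readOscString(buf, afterAddress);
  const args: number[] = [];
  let pos = afterTags;
  for (let k = 1; k < tags.length; k++) { // skip the leading ','
    if (tags[k] === "f") args.push(view.getFloat32(pos, false));
    else if (tags[k] === "i") args.push(view.getInt32(pos, false));
    else throw new Error(`unsupported OSC type tag: ${tags[k]}`);
    pos += 4;
  }
  return { address, args };
}
```

For Part 3, the decoded numbers (for example a radio level) would then be mapped onto parameters of the camera image.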

Step 5: Sound Design, Layers of Sound

Building up a small radio station of New York using open sound sources from aporee.org


In the last step, using the external for Max to build a radio and acquire data from it turned out to cause a lot of problems.

Therefore, on day 6, I instead create an online radio mixer of soundscapes from different locations in New York City, based on the sound mixer at https://aporee.org, aiming to collect the ‘noise’ of New York City.

The pre-recorded sounds come from an open sound-sharing platform and were selected from several locations, such as Central Park, the piers at Chelsea, Brooklyn, Long Island City, Chinatown, and the Hudson River. I decided to compile these sound clips and mix them into a live radio of our city life.
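The mixing here was done with the sound mixer at aporee.org. As a hedged offline equivalent, the TypeScript sketch below sums several mono recordings sample by sample, each scaled by its own gain, and hard-clips the result to [-1, 1]. The function name and the choice of clipping (rather than normalizing the whole mix) are illustrative assumptions, not how that mixer works.

```typescript
// Mix several mono soundscape buffers (samples in [-1, 1]) into one,
// scaling each by its own gain; shorter tracks simply stop contributing.
function mixSoundscapes(tracks: Float32Array[], gains: number[]): Float32Array {
  if (tracks.length !== gains.length) throw new Error("need one gain per track");
  const length = Math.max(0, ...tracks.map((t) => t.length));
  const out = new Float32Array(length);
  tracks.forEach((track, k) => {
    for (let i = 0; i < track.length; i++) out[i] += gains[k] * track[i];
  });
  // Hard-clip so loud combinations stay within the valid sample range.
  for (let i = 0; i < length; i++) out[i] = Math.max(-1, Math.min(1, out[i]));
  return out;
}
```

Lowering all gains as more locations are layered in keeps the mix from clipping constantly.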

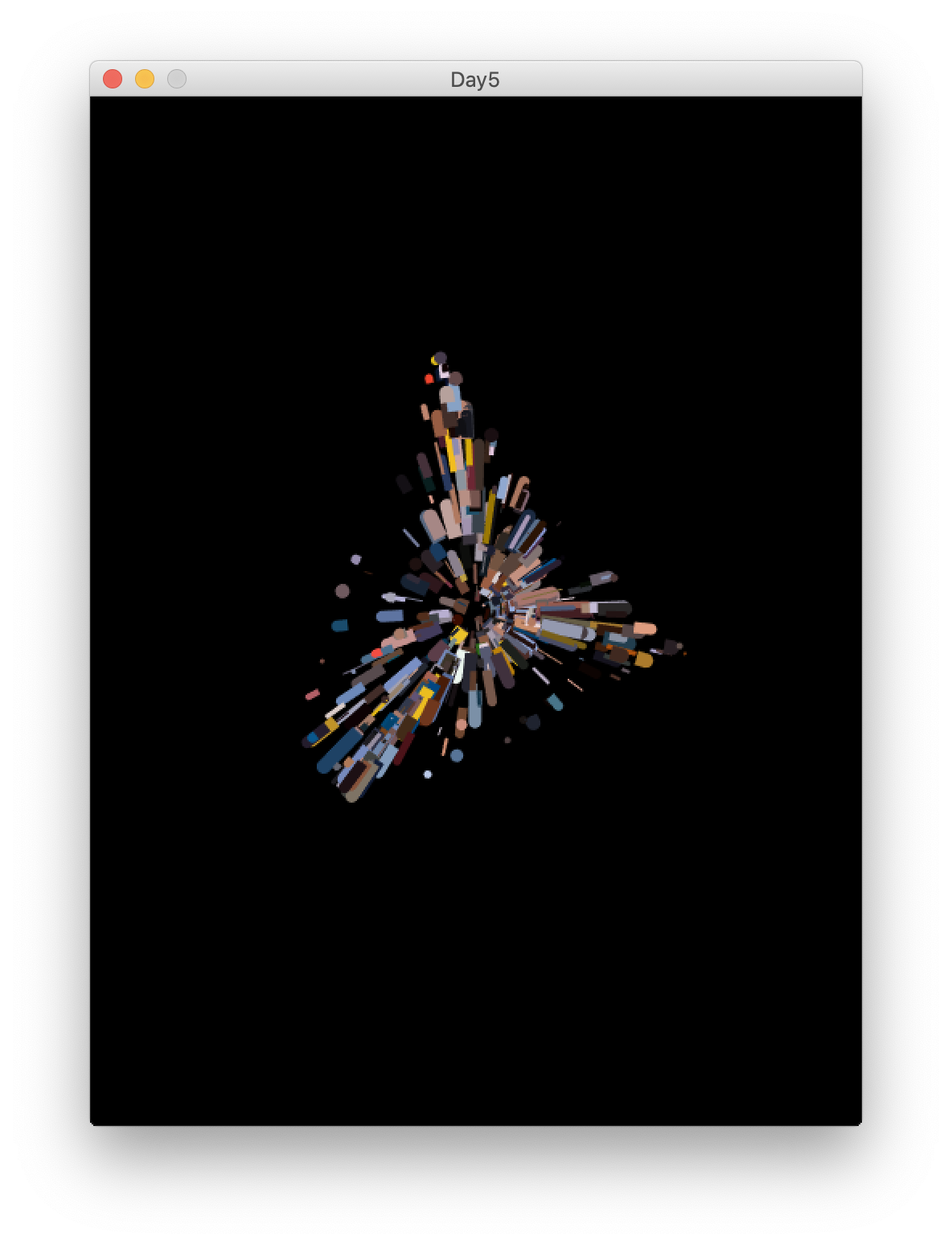

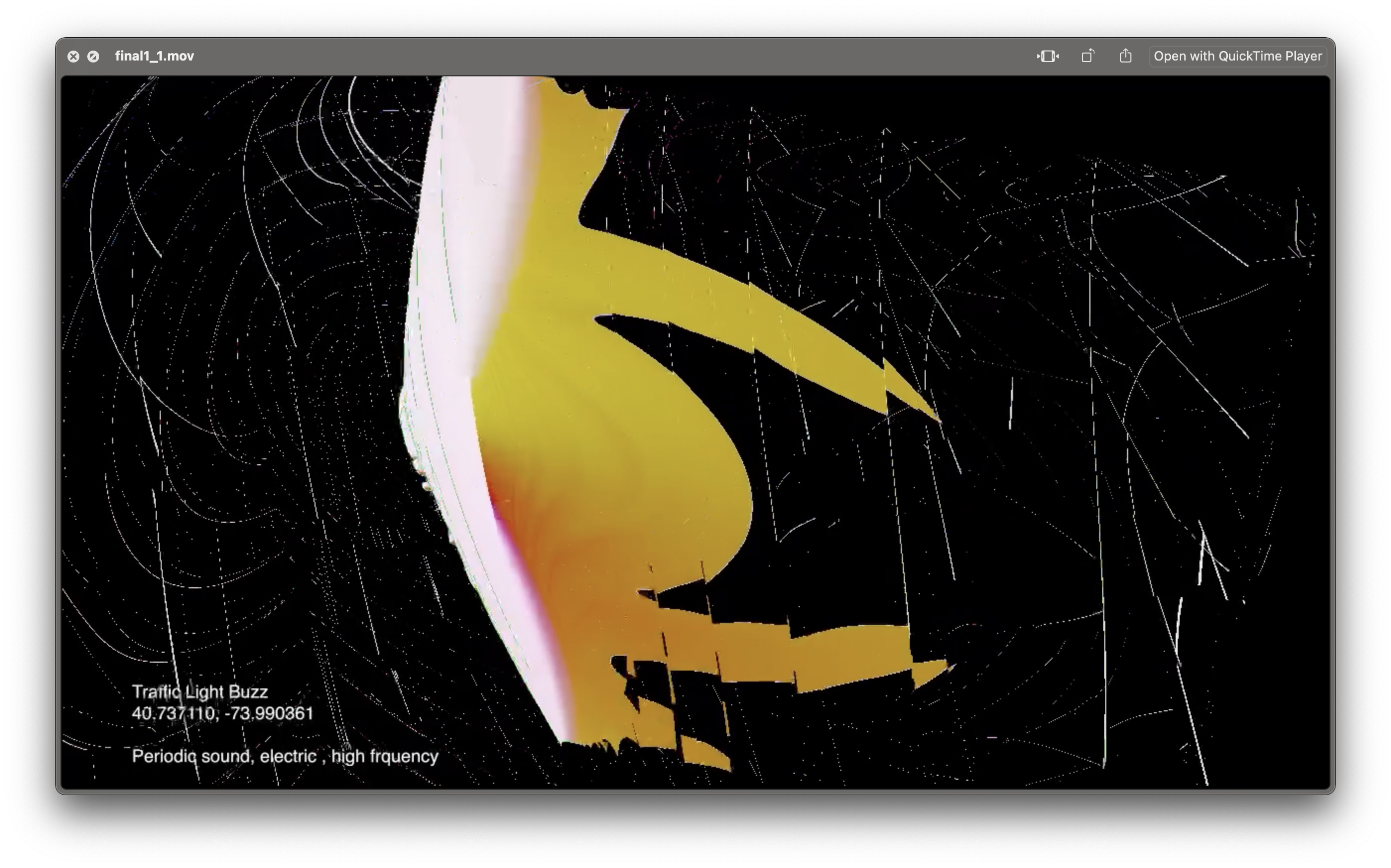

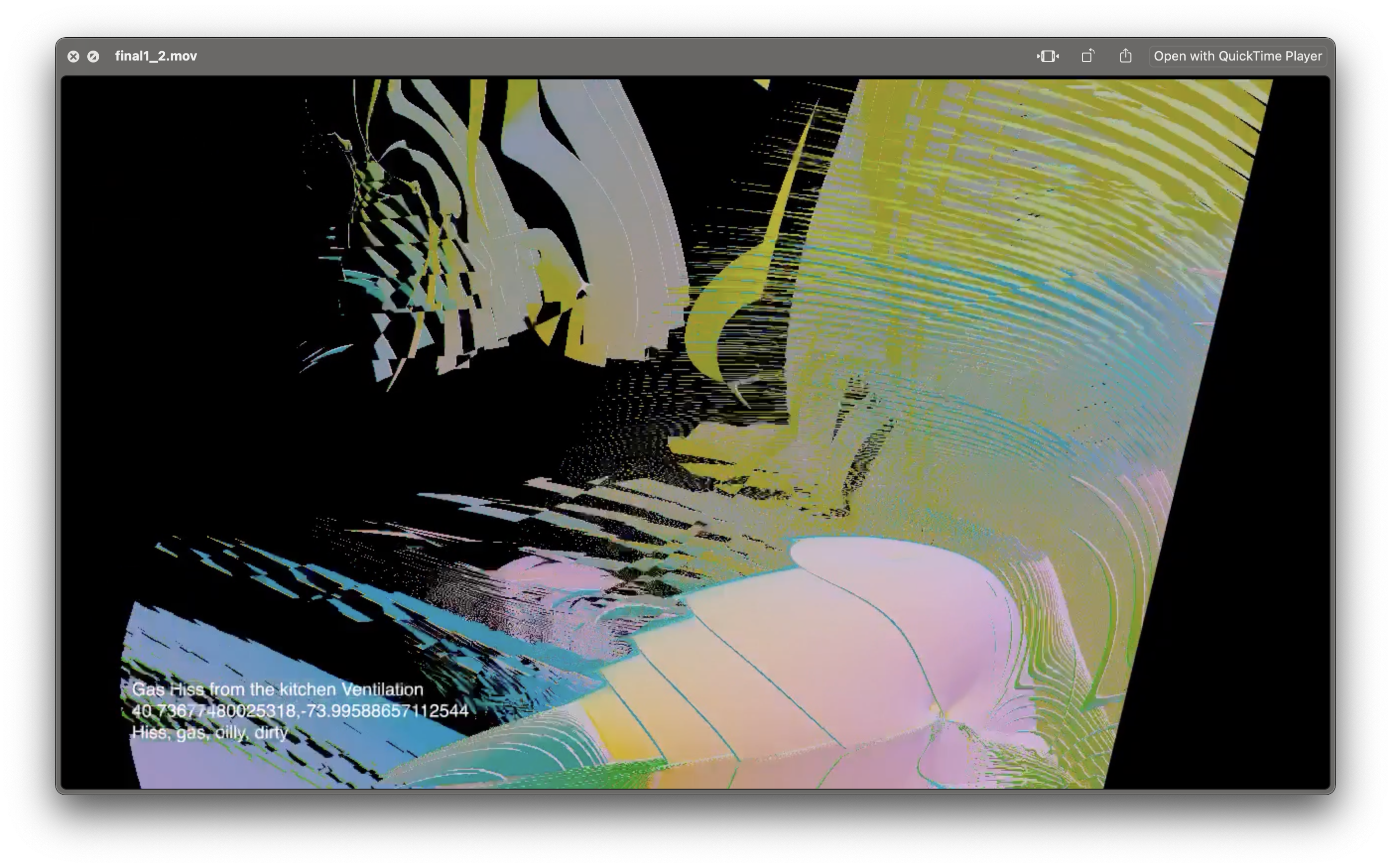

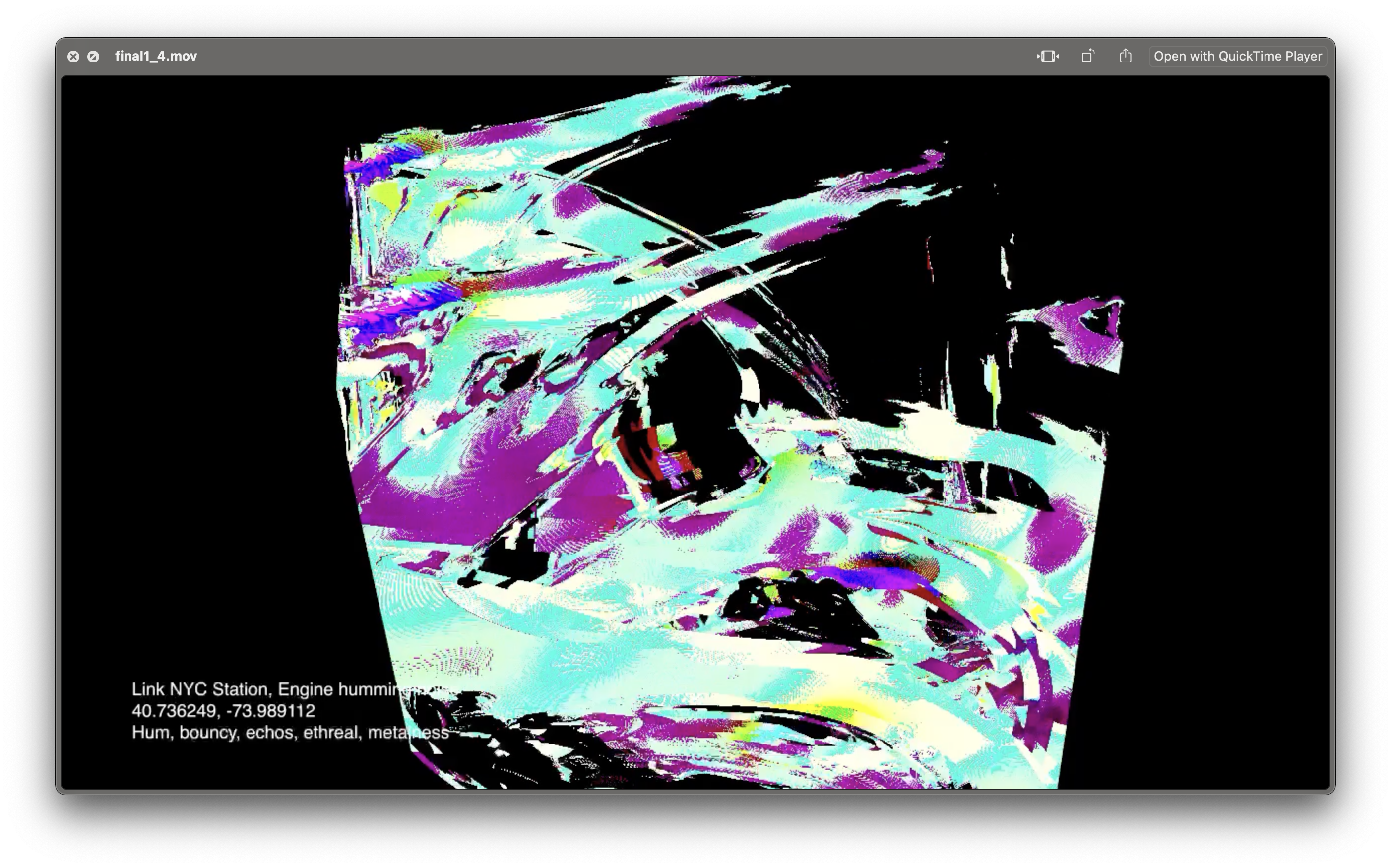

Outcome: Audio-Visualization of New York City Soundscape

![Visualization of sound taken from the image on the right]()

![A protest image from Google Images]()


A Central Park Sound Image

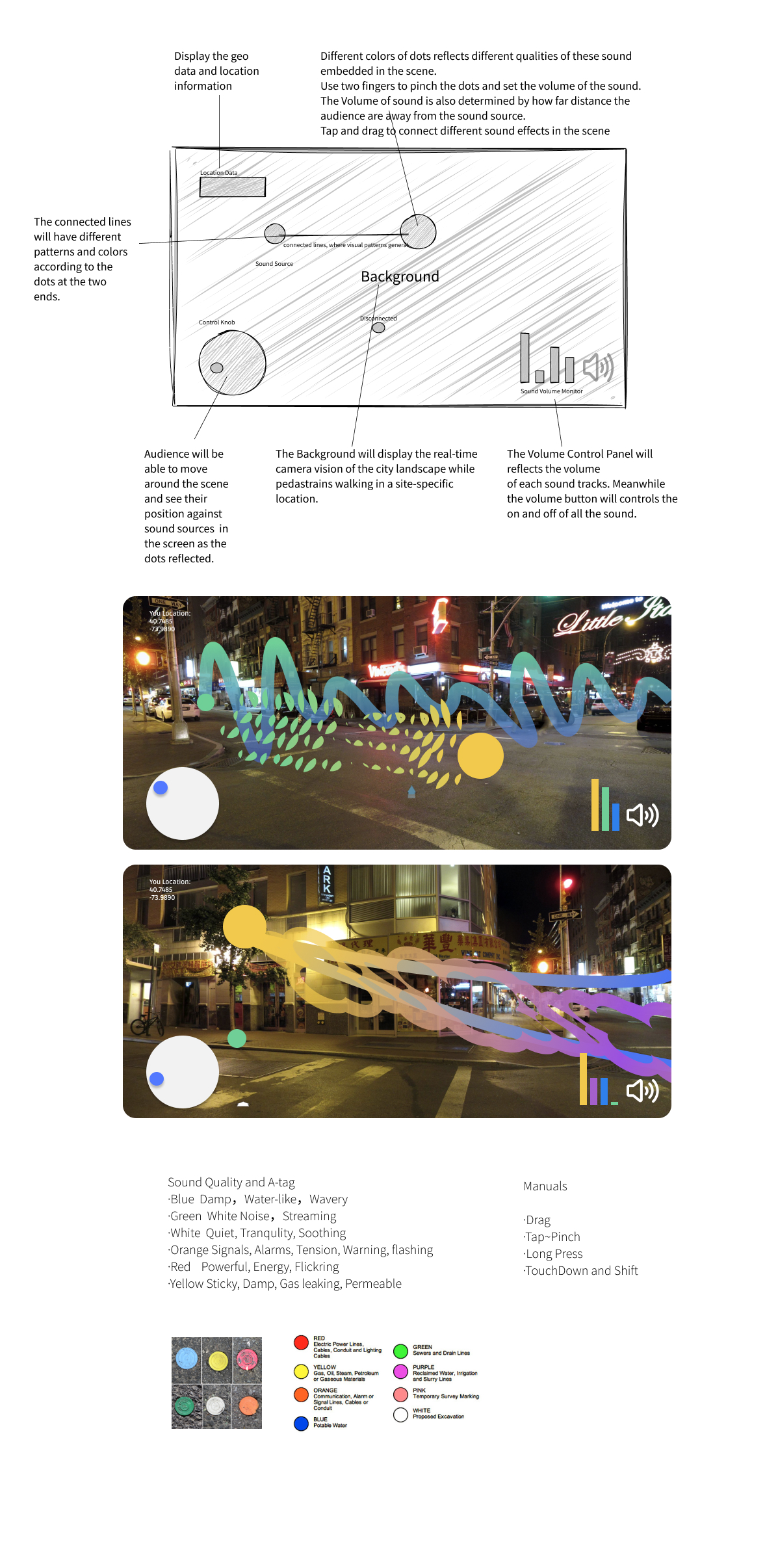

Location-Based WebAR Research

Thesis Research

Highlights:

- Location-Based AR

- WebXR

- Spatial Sound

- Interaction Design

- UI Interface

Project GitHub

This is the thesis research paper, focusing on analyzing sound, defining materials, and searching for solutions to build a location-based WebAR project.

For the entire project, please read through my thesis paper.


Audio-Responsive Visuals: tested in TouchDesigner, built with Shader Park

Issue 1: How to embed these 3D shaders in location-based WebAR?

Location-Based AR

When searching for a way to build location-based AR, I found that A-Frame and AR.js (see links: A-Frame, AR.js) are suitable for this project. Therefore, taking Vue.js as the skeleton of my web app, I started combining A-Frame and AR.js in my framework and tried to display WebGL shaders in the app.

Here are some tests that I did in pure JS by following Nicolò Carpignoli’s Build your Location-Based Augmented Reality Web App.

Here is what I built in the test; the result is deployed on github.io.

As the images show, a simple model loads in the scene.

Features under development:

- menu bar,

- sound,

- popup message box...


The second step is to add location data to the app. To let people find all these objects easily, a pin needs to be displayed over each object.

To run the app, open this link on your phone and allow access to your geolocation data.
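AR.js places each entity at its GPS coordinates by itself, so the following is only a hedged TypeScript sketch of the underlying geometry, useful for deciding which pins to show: the haversine great-circle distance and the initial compass bearing from the user to each pin. The `Pin` type, the function names, and the mean Earth radius of 6,371 km are assumptions for illustration.

```typescript
interface LatLon { lat: number; lon: number } // degrees
interface Pin extends LatLon { name: string }

const EARTH_RADIUS_M = 6371000; // mean Earth radius in meters
const toRad = (deg: number): number => (deg * Math.PI) / 180;

// Great-circle distance in meters between two points (haversine formula).
function distanceMeters(a: LatLon, b: LatLon): number {
  const dLat = toRad(b.lat - a.lat);
  const dLon = toRad(b.lon - a.lon);
  const h =
    Math.sin(dLat / 2) ** 2 +
    Math.cos(toRad(a.lat)) * Math.cos(toRad(b.lat)) * Math.sin(dLon / 2) ** 2;
  return 2 * EARTH_RADIUS_M * Math.asin(Math.sqrt(h));
}

// Initial compass bearing from a to b, in degrees clockwise from north.
function bearingDegrees(a: LatLon, b: LatLon): number {
  const dLon = toRad(b.lon - a.lon);
  const y = Math.sin(dLon) * Math.cos(toRad(b.lat));
  const x =
    Math.cos(toRad(a.lat)) * Math.sin(toRad(b.lat)) -
    Math.sin(toRad(a.lat)) * Math.cos(toRad(b.lat)) * Math.cos(dLon);
  return ((Math.atan2(y, x) * 180) / Math.PI + 360) % 360;
}

// Pins within maxMeters of the user, nearest first.
function nearbyPins(user: LatLon, pins: Pin[], maxMeters: number): Pin[] {
  return pins
    .map((pin) => ({ pin, d: distanceMeters(user, pin) }))
    .filter((e) => e.d <= maxMeters)
    .sort((p, q) => p.d - q.d)
    .map((e) => e.pin);
}
```

The bearing can orient an on-screen arrow toward a pin that is not yet in the camera view.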

Shader in AR

In my research, I found Shader Park to be a suitable library for combining different sound-reactive shaders with the AR scene. With Shader Park's own function createSculpturewithShape, we can easily put the shaders into a scene. I thus created a Glitch template (see link) to hold the 3D shaders. Here are the test template and some screen recordings.

![]()

Issue 2: A Workable Interface to Bring the Audience the Best AR Experience

XR Interface

Round 1:

![]()

Through user testing, I gradually realized that the current interface did not work well in a horizontal view: the bottom menu bar takes up a lot of screen space, especially on Android phones, which have a sticky menu bar at the bottom. Besides, users are more familiar with holding a phone vertically and swinging it around to scan their environment. I decided to drop my old interface design and adopt a transparent menu bar style to enlarge the camera view.

Issue 3: Sound Analysis / Materials / Visualization

Spatial Sound

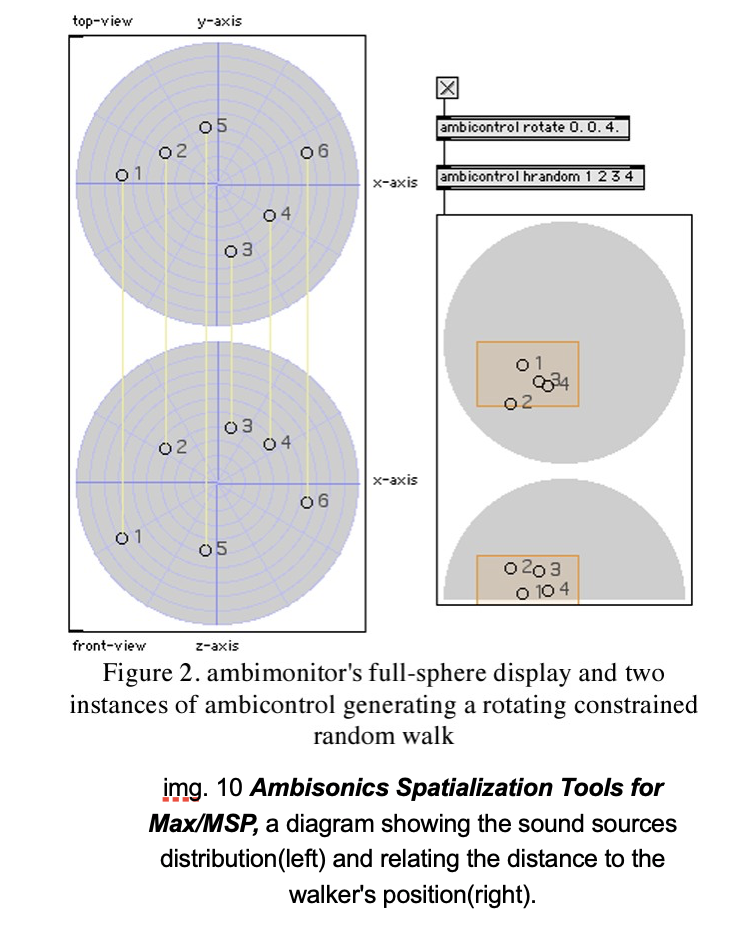

To create an immersive sound experience, this project applies Ambisonics, drawing on the work of the Institute for Computer Music and Sound Technology (ICST), Zurich University of the Arts. Their paper, Ambisonics Spatialization Tools for Max/MSP, describes how an immersive sound field can be represented with spherical harmonics, the basis of the Ambisonic approach (see image below).

![]()

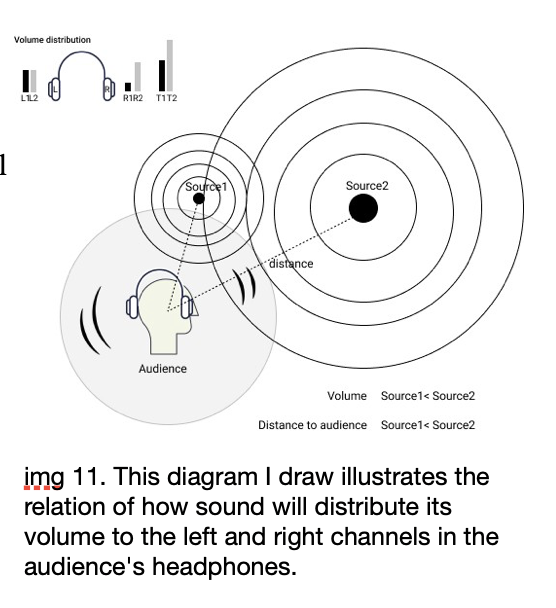

In a sound field, the volume and channels of sound are strongly tied to the positions of the listener and the sound sources. Different sound waves may cancel or reinforce each other: waves arriving from different directions merge at particular locations, where the sound either disappears because the waves cancel each other out or, conversely, the waves cohere and become even stronger.
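A minimal numeric illustration of this cancelling and reinforcing, under idealized assumptions (two equal-amplitude sources at a single frequency, speed of sound 343 m/s): a path difference Δd shifts one wave's phase by 2πΔd/λ, and the peak of the summed wave varies between 2 (in phase) and 0 (half a wavelength apart). The function names are hypothetical.

```typescript
const SPEED_OF_SOUND = 343; // m/s in air at about 20 °C

// Phase shift (radians) caused by a path-length difference at a given frequency.
function phaseFromPathDifference(deltaMeters: number, freqHz: number): number {
  const wavelength = SPEED_OF_SOUND / freqHz;
  return (2 * Math.PI * deltaMeters) / wavelength;
}

// Peak of sin(t) + sin(t + phase), found by sampling one period.
// Analytically this equals 2 * |cos(phase / 2)|.
function summedPeak(phase: number, samples = 1000): number {
  let peak = 0;
  for (let n = 0; n < samples; n++) {
    const t = (2 * Math.PI * n) / samples;
    peak = Math.max(peak, Math.abs(Math.sin(t) + Math.sin(t + phase)));
  }
  return peak;
}
```

At 343 Hz the wavelength is 1 m, so standing 0.5 m closer to one source than the other puts the two waves half a cycle apart and they cancel.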

Sound sources with different frequencies also form a hierarchy in this field. These sounds co-exist because our ears can easily tell them apart, much as hot air floats to the top of a room while cold air sinks to the bottom. Sounds that are more rhythmic or have special features, such as a sudden siren or clunking metal, stand out more than sounds with flat frequencies, such as electric current noise.

Designing the Spatial Sound

![]()

To realize this spatial sound feature, I used A-Frame's sound component to build the acoustic space, mimicking the real hearing experience. A-Frame is an open-source WebXR framework built on Three.js, a JavaScript 3D library. Once a sound starts playing in an A-Frame scene, the distance between the sound and the listener determines how loudly we hear it. Another significant feature is that when we walk around the sound with headphones on, we can easily detect the left-to-right channel shift.
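A-Frame's `sound` component exposes `distanceModel`, `refDistance`, `rolloffFactor`, and `maxDistance` properties, which end up on the browser's Web Audio panner. The TypeScript sketch below reproduces the three distance-model gain formulas from the Web Audio API specification, plus a simple equal-power left/right pan. The pan is a simplification for illustration: positional audio in Three.js may use HRTF panning, which is more detailed than this; the function names are hypothetical.

```typescript
type DistanceModel = "linear" | "inverse" | "exponential";

// Gain for a listener d meters from a source, per the Web Audio API distance models.
function distanceGain(
  d: number,
  model: DistanceModel,
  refDistance = 1,
  maxDistance = 10000,
  rolloffFactor = 1,
): number {
  switch (model) {
    case "linear": {
      const clamped = Math.max(Math.min(d, maxDistance), refDistance);
      return 1 - (rolloffFactor * (clamped - refDistance)) / (maxDistance - refDistance);
    }
    case "inverse":
      return refDistance / (refDistance + rolloffFactor * (Math.max(d, refDistance) - refDistance));
    case "exponential":
      return Math.pow(Math.max(d, refDistance) / refDistance, -rolloffFactor);
    default:
      throw new Error(`unknown distance model: ${model}`);
  }
}

// Equal-power stereo gains for a pan value from -1 (hard left) to 1 (hard right).
// left^2 + right^2 is always 1, so perceived loudness stays constant while panning.
function equalPowerPan(pan: number): { left: number; right: number } {
  const x = (Math.max(-1, Math.min(1, pan)) + 1) / 2;
  return { left: Math.cos((x * Math.PI) / 2), right: Math.sin((x * Math.PI) / 2) };
}
```

With the default inverse model, walking from 1 m to 3 m away drops the gain to one third, which matches the intuitive "fading as we walk away".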

Sound Analysis

New York has become one of the noisiest cities in the world. The richness of its soundscapes makes it an ideal place to collect different noises. Its falling and rising sirens and traffic noise have raised the sound spectrum to an elevated level, and people have become used to this noise as part of their daily lives. However, besides that noise, the city is full of street music, talking, speeches, and vivid everyday sounds, which turn it into a large auditory library. These sounds suddenly jump out as interesting and unique, breaking everyday routine and creating their own open narratives. In the article, the author describes city soundscapes as having these features: some sounds have a noticeable foreground, background, perspective, and movement.

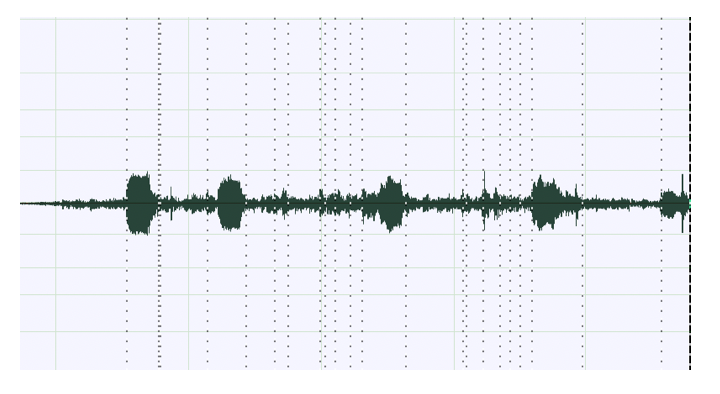

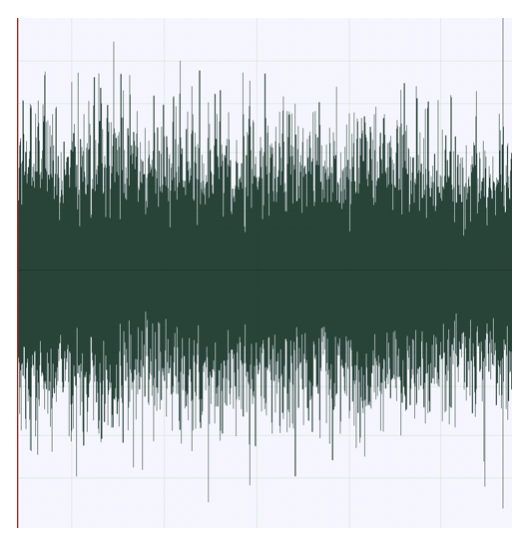

It is easy to read from these spectrum images that the left two sounds have less damping and reverb than the right two, while the right two are more rhythmic than the left two.
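The comparison above is read by eye from spectrograms. As a hedged complement, one simple number separates steady sounds from pulsing ones: split the signal into frames, take each frame's RMS level, and compute the coefficient of variation (standard deviation divided by mean) of those levels. This metric, its name `rhythmicity`, and the 1024-sample default frame size are illustrative assumptions, not part of the original analysis.

```typescript
// Split a mono signal into non-overlapping frames and return each frame's RMS level.
function frameRms(signal: Float32Array, frameSize: number): number[] {
  const levels: number[] = [];
  for (let start = 0; start + frameSize <= signal.length; start += frameSize) {
    let sum = 0;
    for (let i = start; i < start + frameSize; i++) sum += signal[i] * signal[i];
    levels.push(Math.sqrt(sum / frameSize));
  }
  return levels;
}

// Coefficient of variation of the loudness envelope: near 0 for a steady hum,
// larger for pulsing sounds such as elevator beeps or construction clanks.
function rhythmicity(signal: Float32Array, frameSize = 1024): number {
  const levels = frameRms(signal, frameSize);
  if (levels.length === 0) return 0;
  const mean = levels.reduce((a, b) => a + b, 0) / levels.length;
  if (mean === 0) return 0;
  const variance = levels.reduce((a, b) => a + (b - mean) ** 2, 0) / levels.length;
  return Math.sqrt(variance) / mean;
}
```

A steady electrical hum scores close to 0, while a beep that is on half the time and silent the other half scores about 1.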

As another important part of the AR interaction, the visual patterns will finally be determined by the qualities of sound. The following steps demonstrate how I visualized the sound to match the quality of sound to the color of A-tags.

A-tags will reflect the properties of a sound. Let me explain it in the following part.

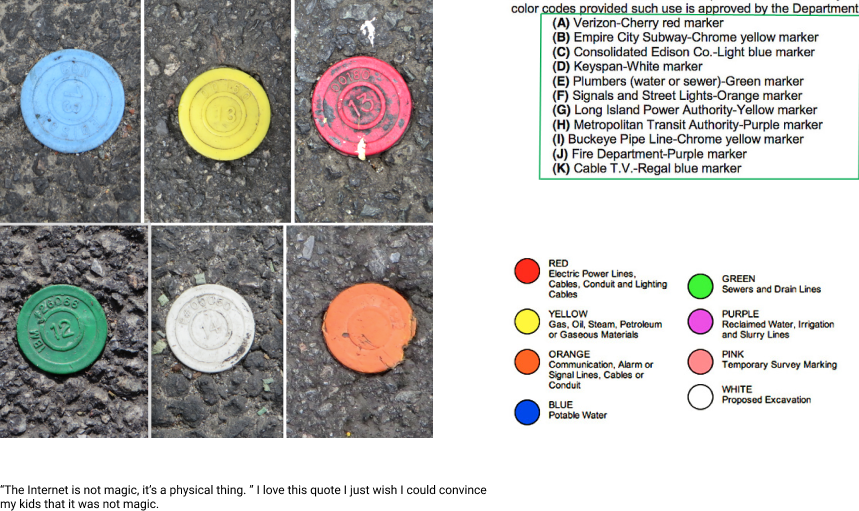

Such categories become an efficient way for me to tag the key features of the noise sound that I collected:

![a. periodic noise, beeps of an elevator]()

![b. noise of electricity from a lit bulb]()

![c. a hissing manhole on the road, echoing off concrete]()

![d. hissing gas from the building pipes with stronger recursion]()

For instance, if a sound is related to waterworks, such as a sewer pipe, or is formed in a space with rich reverb, like a large water storage tank, I tag it with a blue A-tag to mark its water-like quality. If it is like the bulb sound I mentioned, with high-frequency buzzing, I tag it with an orange tag to show its electric-current features. If the sound comes from friction between air and infrastructure or pipes, usually plastic water sewers, it can be tagged with plastic features, using yellow (oil and gas) and purple (reclaimed water, muddy feeling) A-tags. Lastly, sounds with metallic properties (bouncy, high reverb, the strident clinks of construction work) can be tagged with the powerful orange color, meaning communication, alarms, and warnings.
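The tagging above is done by listening. To make the color scheme explicit, the TypeScript sketch below encodes it as a rule: score each sound on a few descriptors and pick the tag of the strongest one. The descriptor names and the 0 to 1 scores are hypothetical; the colors and meanings follow the paragraph above, including orange for both electric buzzing and metallic sounds.

```typescript
type TagColor = "blue" | "orange" | "yellow" | "purple";

// Hypothetical descriptors for one recording, each scored from 0 to 1.
interface SoundTraits {
  waterLike: number; // waterworks, sewer pipes, rich tank-like reverb
  electricBuzz: number; // high-frequency buzzing, like a lit bulb
  airFriction: number; // air rubbing against pipes or infrastructure
  muddy: number; // for friction sounds: muddy, reclaimed-water feeling
  metallic: number; // bouncy, high-reverb, strident construction clinks
}

interface ATag { color: TagColor; meaning: string }

// Pick the A-tag belonging to the strongest trait (ties keep the earlier entry).
function assignATag(t: SoundTraits): ATag {
  const candidates: [number, ATag][] = [
    [t.waterLike, { color: "blue", meaning: "water" }],
    [t.electricBuzz, { color: "orange", meaning: "electric current" }],
    [
      t.airFriction,
      t.muddy >= 0.5
        ? { color: "purple", meaning: "reclaimed water" }
        : { color: "yellow", meaning: "oil and gas" },
    ],
    [t.metallic, { color: "orange", meaning: "communication, alarms, warnings" }],
  ];
  candidates.sort((a, b) => b[0] - a[0]);
  return candidates[0][1];
}
```

Writing the scheme down this way makes the one ambiguity visible: two different qualities share the orange color, so the `meaning` field is what tells them apart.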

To embed Ambisonic sound in this project, I found that A-Frame has its own sound component that makes spatial sound feasible in a browser.

A-tags & Visualization


![]()

Finally, I used Shader-Park-Core as the 3D library to build the sound-reactive shader.

To determine the color, shine, and metalness of a sound, I used three functions defined by Shader Park to generate the materials for the 3D sound sculpture. The image below shows how to use the shine(), metal(), and occlusion() functions to make a series of materials that transition from metal to chalk.

Besides, with the input() function in Shader Park, it is easy to define custom variables such as time and sound features. This lets the sculpture acquire volume or frequency values from an A-Frame sound object and turn the 3D visualization into a sound-reactive or sound-driven form. For example:
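To show how such a series can be generated, the TypeScript sketch below interpolates three values that would be passed to Shader Park's metal(), shine(), and occlusion() calls for each sculpture in the row. The endpoint values chosen for "metal" and "chalk", and the names `Material` and `materialSeries`, are assumptions, not the settings used in the image.

```typescript
interface Material { metal: number; shine: number; occlusion: number }

// Hypothetical endpoints: a polished metal and a matte, chalk-like surface.
const METAL: Material = { metal: 1.0, shine: 1.0, occlusion: 0.0 };
const CHALK: Material = { metal: 0.0, shine: 0.0, occlusion: 1.0 };

// `steps` materials evenly spaced between two endpoints, both ends included.
function materialSeries(from: Material, to: Material, steps: number): Material[] {
  const lerp = (a: number, b: number, t: number): number => a + (b - a) * t;
  return Array.from({ length: steps }, (_, k) => {
    const t = steps === 1 ? 0 : k / (steps - 1);
    return {
      metal: lerp(from.metal, to.metal, t),
      shine: lerp(from.shine, to.shine, t),
      occlusion: lerp(from.occlusion, to.occlusion, t),
    };
  });
}
```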

![]()

Shader Park lets us write GLSL shaders in simple JavaScript. To apply the above values to the shapes, I can simply do this:

![]()

Finally, we get these sound-reactive shaders. By applying sound analysis, we can feed values into these shaders and make the objects audio-responsive.
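One hedged way to produce the volume value fed into these shaders: in the browser, a Web Audio `AnalyserNode` can fill a buffer with time-domain samples through `getFloatTimeDomainData()`, and the TypeScript below turns each buffer into an RMS level and smooths it so the sculpture does not flicker. The class name and the 0.8 smoothing factor are hypothetical; the smoothed value would then be handed to the variable declared with input().

```typescript
// Root-mean-square level of one buffer of samples in [-1, 1].
function rms(block: Float32Array): number {
  if (block.length === 0) return 0;
  let sum = 0;
  for (let i = 0; i < block.length; i++) sum += block[i] * block[i];
  return Math.sqrt(sum / block.length);
}

// Exponentially smoothed loudness: each update keeps `smoothing` of the old
// value and mixes in (1 - smoothing) of the new buffer's RMS level.
class LevelFollower {
  private value = 0;
  private readonly smoothing: number;

  constructor(smoothing = 0.8) {
    this.smoothing = smoothing;
  }

  update(block: Float32Array): number {
    this.value = this.smoothing * this.value + (1 - this.smoothing) * rms(block);
    return this.value;
  }
}
```

A higher smoothing factor gives slower, calmer motion; a lower one makes the shape snap to every transient.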


Make a Low-Poly Forest

2020

This is a 3D forest experience in which the audience can walk through the scene and control elements of the forest, such as the weather and planting...

This program is based on Three.js and JavaScript. It runs on any web-based platform.

1 1&1/2

River Project in Prague, 2018

You are cordially invited to the presentation of the multichannel installation One&One&aHalf, which links the soundscapes of two distant rivers: the Vltava in Prague and the Huangpu in Shanghai. The listener, walking in the gallery space defined by field recordings, influences the volume of 5 channels of sound. When a visitor approaches a speaker, its soundscape slowly fades away, disappearing in the distance, and the volume of the opposite soundscapes increases. The movement of the visitor also triggers different soundtracks. The visual aspect of the installation is the actual vista of the Vltava through the gallery windows.
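The installation itself ran on Processing, Kinect, and Max/MSP. As a hedged TypeScript sketch of the behavior described above, each speaker's gain can follow the visitor's distance to it: silent when standing at the speaker, full volume beyond a fade radius, with per-frame smoothing so the change is gradual. The 2 m radius, the linear fade curve, the smoothing rate, and the function names are assumptions, not the piece's actual settings.

```typescript
interface Point { x: number; z: number } // floor-plane position in meters, e.g. from a depth camera

// Target gain per speaker: 0 when the visitor stands at the speaker, rising
// linearly to 1 once the visitor is fadeRadius meters or farther away.
function speakerGains(visitor: Point, speakers: Point[], fadeRadius = 2): number[] {
  return speakers.map((s) => {
    const d = Math.hypot(visitor.x - s.x, visitor.z - s.z);
    return Math.min(1, d / fadeRadius);
  });
}

// Move each current gain a fraction of the way toward its target every frame,
// so soundscapes fade slowly instead of jumping.
function smoothGains(current: number[], target: number[], rate = 0.05): number[] {
  return current.map((g, k) => g + (target[k] - g) * rate);
}
```

Each frame, the smoothed gains would be sent on (for instance over OSC) to set the volume of the 5 channels.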

When I arrived in Prague a few months ago, I started to explore how to compare the soundscapes of Prague and Shanghai. I collected field recordings of the rivers, including traffic sounds at the waterfront, their ecologies, and the voices of pedestrians and birds. Shanghai is the symbol of the modern city in the East, while Prague is the symbol of the continuity of tradition in the West. However, this work is not a comparison of the soundscapes of the two places. I aim to blend the two audio images. From the merging of the soundscapes of these two cities emerges a new space that is neither Prague nor Shanghai: a hybrid virtual space with personal memories of living in a waterfront city, oblivious to the moods of the giant ecosystem.

Ideas and Design:

The project connects field recordings from the Vltava (Prague) and the Huangpu River (Shanghai) in a multichannel installation. Meanwhile, it engages the participants in interacting with the sound.

Technology:

Processing, Kinect V2 & V1, OSC library, speakers, sound card, and Max/MSP

All the sound sources of this project are recorded by Zhijun Song.

Special thanks to Rose Zhang and Quynh Nguyen for assisting with recordings. Also, special thanks to Miloš Vojtěchovský, Sara Pinheiro, and Martin Klusák for technical support.


About Zvuky Prahy

It is a platform that collects field recordings of sounds in Prague.

For the original soundtracks of this project, please see https://sonicity.cz/en/sounds?uid=SongZhijun&tid=
